Improving a website always carries a risk of unintended consequences, even when the improvements are search engine optimizations (SEO). One such problem is duplicate content, which, if ignored, can lower your site’s SEO value and hurt its search rankings.

This post, Find And Remove Duplicate Content From Your Website, explains how to find duplicate content, the most common reasons it appears, and how to eliminate it from your website.

What is duplicate content?

Duplicate content is content that can be reached at more than one web address (URL). When the same material appears at several URLs, search engines can’t tell which one to prioritize, so they may rank all of them lower and favor other websites instead.

Why prevent duplicate content on your site?

Expect a drop in search rankings if your site has duplicate content. When search engines can’t tell which page to recommend, any pages they flag as duplicates risk ranking lower in search results, and that is the best-case scenario. If your duplicate content problems are severe, for example thin content mixed with word-for-word copied text, you may even face a manual action from Google for trying to deceive visitors. A good amount of original content on every page is essential if you want your pages to rise in search rankings.

However, duplicate content isn’t only a problem for search engine results pages (SERPs). If visitors looking for specific content grow frustrated because they can’t find the right page on your site, something is wrong. Addressing duplicate content is therefore crucial for both user experience and SEO.

1. Impact on SEO and Rankings

Because duplicate content confuses search engine crawlers, a site’s rankings, link equity, and page authority may be split across many URLs. Search engines don’t necessarily pick the same URL each time they judge a page’s relevance to a query, and other sites may link to different versions of the page. As a result, links, page authority, and ranking strength end up divided among the URL variations instead of concentrated on one page.

2. Causes of duplicate content

In reality, there are plenty of reasons content can appear more than once, and most of them are technical. It’s uncommon for a person to deliberately publish the same content in two places without marking which version is the original; apart from accidentally publishing a copied post, most people would find that strange. Technical causes are far more common, and they typically arise because developers don’t think like a browser, a user, or a search engine spider.

Duplicate content can arise, mostly unintentionally, in many different contexts. Once you understand the ways URLs can vary, you can more easily spot the URLs on your site that lead to identical content.

As you find duplicate content URLs, keep an eye out for other parts of your website’s URLs that might benefit from optimization.

3. URL Variations

Session identifiers, query parameters, and case sensitivity contribute to URL variations. A duplicate page may be generated if a URL contains parameters that do not alter the page’s content.

For example, https://wbcomdesigns.com/blog/local-seo/ and https://wbcomdesigns.com/blog/local-seo/?source=ppc both take you to the same page, but through different URLs, so the same content is served twice.

Session IDs cause the same problem. They let you track users’ actions and navigation paths while they are on your site, and each page a user visits gets their session ID appended to its URL. Adding a session ID to a URL creates a new address for the same page, which produces duplicate content.

Although it happens rarely, a letter in a URL may be capitalized by mistake, so it’s important to stay consistent by always using lowercase characters. Otherwise, wbcomdesigns.com/blog and wbcomdesigns.com/Blog can become two identical pages on the same website.
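To see how these variations collapse into a single page, here is a minimal sketch in Python using only the standard library. The `normalize_url` function and the `IGNORED_PARAMS` set are hypothetical: the set assumes parameters such as `source` and session IDs like `sessionid` never change page content, and the path is lowercased only because the sketch assumes the site uses lowercase URLs exclusively.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical: parameters assumed NOT to change the page's content.
# Replace with the tracking and session parameters your site actually uses.
IGNORED_PARAMS = {"source", "utm_source", "utm_medium", "utm_campaign", "sessionid", "sid"}


def normalize_url(url: str) -> str:
    """Return one normalized form so URL variants of the same page compare equal."""
    parts = urlsplit(url)
    # Hostnames are case-insensitive; the path is lowercased because this
    # sketch assumes the site only ever uses lowercase URLs.
    host = parts.netloc.lower()
    path = parts.path.lower() or "/"
    # Drop parameters that don't alter content; sort the rest so order doesn't matter.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # The fragment (#...) is never sent to the server, so it is dropped too.
    return urlunsplit((parts.scheme.lower(), host, path, urlencode(query), ""))
```

For example, `normalize_url("https://wbcomdesigns.com/blog/local-seo/?source=ppc")` returns `https://wbcomdesigns.com/blog/local-seo/`, the same string as the parameter-free URL, while a content-changing parameter such as `page=2` is kept.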

4. HTTP vs HTTPS and www vs non-www

When you secure your website by installing an SSL certificate and switching from HTTP to HTTPS, your content may become available at both the HTTP and the HTTPS version of each URL unless you redirect one to the other. Likewise, your content may be reachable whether users type “www” or leave it out in the address bar.

These URLs all point to the same page, yet to search engine spiders, they’re all distinct locations:

- https://wbcomdesigns.com

- http://wbcomdesigns.com

- www.wbcomdesigns.com

- wbcomdesigns.com

Choose one preferred version and point all the other versions to it.
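As a rough sketch of “pointing” the other versions, the Python function below computes where a request should be permanently redirected. The preferred version (`https://www.wbcomdesigns.com`) and the `SITE_HOSTS` set are assumptions for illustration; the function and constant names are hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumption: HTTPS with the "www" prefix is the version you chose.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.wbcomdesigns.com"
SITE_HOSTS = {"wbcomdesigns.com", "www.wbcomdesigns.com"}


def redirect_target(url: str) -> Optional[str]:
    """Return the preferred URL to redirect to, or None if `url` is already preferred."""
    # urlsplit only finds a host after "//", so treat bare hostnames as plain HTTP.
    if "://" not in url:
        url = "http://" + url
    parts = urlsplit(url)
    # .hostname is lowercased and has any port stripped.
    if parts.hostname not in SITE_HOSTS:
        raise ValueError(f"{url} is not on this site")
    if parts.scheme == PREFERRED_SCHEME and parts.hostname == PREFERRED_HOST:
        return None
    # Keep the path and query so every page maps to its own preferred twin.
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, ""))
```

Each of the four addresses listed above except the preferred one maps to a target under `https://www.wbcomdesigns.com/`; the actual redirect would be configured on your server or CMS.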

5. Scraped or Copied Content

When one website “borrows” material from another, it’s called “content scraping.” If search engines like Google cannot determine which page is the original, they may favor the scraped copy over the page on your website.

Online stores that publish official manufacturer descriptions frequently run into the same problem. When many websites selling the same product use the same manufacturer description, that text appears as duplicate content across all of them.

6. Misunderstanding the concept of a URL

Your website is likely managed by a content management system (CMS). The database may hold only one copy of an article, yet the CMS may let that article be reached through several different URLs. To a developer, the article’s database ID, not its URL, is its definitive identifier. To a search engine, however, the URL is the content’s only identifier. Once you explain this to your developer, they will begin to understand the issue, and after reading this article you’ll be able to suggest solutions right away.

How to Find Duplicate Content

There are several ways to identify duplicates on your site. Here are three free approaches for tracking down duplicate content, checking whether pages are reachable at multiple URLs, and identifying the root causes of duplicates on your site. This groundwork will help when you remove the duplicates.

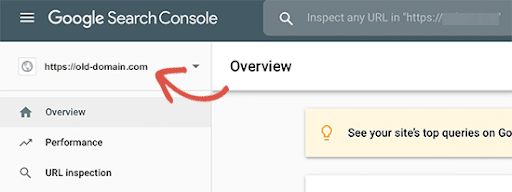

1. Google Search Console

Google’s free Search Console is a good place to start. Once you’ve set it up, you can monitor how your pages perform in search. The Search results report in the Performance menu shows which of your URLs appear in search, which helps you spot URLs that may be contributing to duplicate content problems.

Watch out for the following frequent problems:

- URLs that can be accessed over both HTTP and HTTPS

- URLs with and without the “www” prefix

- URLs with and without a trailing slash “/”

- URLs with and without query parameters

- URLs in both uppercase and lowercase

- Searches or listings that span several results pages

Keep a log of the duplicate URLs you find. We’ll discuss potential solutions later!
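If your log is a plain list of URLs, for example one copied from Search Console, a short Python sketch can group it by a key that ignores the variations listed above. The function names are hypothetical, and the key deliberately over-groups (it drops every query parameter, so `?page=2` lands with page 1), so treat each group as a candidate to review by hand rather than a confirmed duplicate.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def duplicate_key(url: str) -> tuple:
    """Reduce a URL to (host, path), ignoring the variations listed above."""
    # urlsplit needs "//" to recognize a host, so add a scheme to bare hostnames.
    parts = urlsplit(url if "://" in url else "http://" + url)
    host = parts.hostname or ""        # HTTP vs HTTPS: the scheme is ignored
    if host.startswith("www."):        # www vs non-www
        host = host[len("www."):]
    # Uppercase vs lowercase and trailing slash vs none; the query string is dropped.
    path = parts.path.lower().rstrip("/") or "/"
    return (host, path)


def find_duplicate_groups(urls):
    """Return lists of URLs that share a key and so may serve the same content."""
    groups = defaultdict(list)
    for url in urls:
        groups[duplicate_key(url)].append(url)
    return [group for group in groups.values() if len(group) > 1]
```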

2. “Site:” Search

To see the pages Google has indexed, and could therefore rank in search results, go to Google Search and enter “site:” followed by your website’s URL.

For example, to see the blog, enter “site:wbcomdesigns.com/blog” into Google.

The results show two nearly identical Blue Frog blog pages. This matters because, although these pages are not technically identical, they share the same title tag and meta description, which leads to keyword cannibalization: the two pages compete with each other for the same rankings.

3. Duplicate Content Checker

SEO Review Tools built this free duplicate content checker to help websites protect themselves against content scraping. Enter a URL into the checker, and it returns a list of external and internal URLs with content similar to that page.

We entered “https://www.wbcomdesigns.com/” into the checker, and the results were as follows:

Finding external duplicate content is crucial. External duplicate content appears when another domain “steals” your site’s content (content scraping). After finding such a copy, you can ask Google to remove it by submitting a removal request.
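How SEO Review Tools measures similarity isn’t described here, so the following is only a sketch of one common technique you could use to compare your page’s text with a suspected copy yourself: Jaccard similarity over word “shingles” (overlapping runs of words). The function names and the default shingle size of 3 are assumptions, not the checker’s method.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Split text into overlapping runs of `size` words ("shingles")."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a: str, text_b: str, size: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets: 0.0 = nothing shared, 1.0 = identical."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0  # at least one text is too short to compare
    return len(a & b) / len(a | b)
```

For instance, `similarity("a b c d", "a b c e")` returns 1/3: the texts share one of three distinct shingles. Any threshold you pick for “too similar” is a judgment call for your own content.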

Ways to Remove Duplicate Content

Removing duplicates lets search engine spiders reach and index the right page. However, you may not want to delete every last instance of identical content; sometimes you only need to tell search engines which version is the primary one. Below are some strategies for managing repeated content across your site.

1. Rel = “canonical” tag

Using the rel=canonical tag, webmasters can tell search engine spiders that two different URLs lead to the same content. Search engines then treat the URL named in the tag as the “original” version and attribute the link equity and ranking signals to it.

Remember that the rel=canonical tag doesn’t remove the duplicate page: visitors can still reach it, and search engines treat the tag as a strong hint about which version to index rather than a strict command.

The rel=canonical tag is helpful when the duplicate version doesn’t need to be removed, such as URLs with parameters or trailing slashes.

Take this excerpt from a Wbcomdesigns blog article as an illustration.

You can see that we have marked the original version of the page as https://wbcomdesigns.com/how-to-backup-restore/. Rather than crediting the page view to the long URL with tracking parameters at the end, this points search engines to the desired URL.
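A minimal Python sketch of generating such a tag: it builds the `<link rel="canonical">` element by stripping the query string and fragment from the requested URL. The function name and the `utm_*` parameters in the usage example are hypothetical, and the sketch assumes no query parameter changes the content of this page.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(requested_url: str) -> str:
    """Build a <link rel="canonical"> tag pointing at the URL without its query string."""
    parts = urlsplit(requested_url)
    # Assumption: on this page no query parameter changes the content,
    # so the parameter-free URL is the canonical one.
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    # Escape the URL so it is safe inside an HTML attribute.
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}" />'


# Usage: the tracking-parameter URL points back to the clean article URL.
print(canonical_link_tag(
    "https://wbcomdesigns.com/how-to-backup-restore/?utm_source=newsletter&utm_medium=email"
))
```

The tag belongs in the page’s `<head>`; most CMSs and SEO plugins can output it for you.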

2. 301 Redirects

If you don’t want people to be able to visit the duplicate page, a 301 redirect is your best bet. A 301 redirect tells search engines that Page A has moved permanently to Page B, so Page A should no longer be indexed and Page B should receive all of its traffic and SEO value.

When deciding which pages to keep and which to redirect, look for the highest-performing, best-optimized page. Combining content from several pages that compete for the same rankings into a single, stronger page creates a more relevant experience for users and is preferred by search engines.

To improve your SEO, use 301 redirects for the duplicate pages you don’t need, so their value is consolidated on the page you keep.
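On a WordPress site you would normally set redirects in a plugin or the web server configuration; the Python sketch below only illustrates the mechanism, as a small middleware for any app following Python’s WSGI interface (PEP 3333). The paths in `REDIRECTS` and the demo `site` app are hypothetical.

```python
from typing import Callable, Dict

# Hypothetical map of duplicate paths to the page you decided to keep.
REDIRECTS: Dict[str, str] = {
    "/blog/local-seo-guide/": "/blog/local-seo/",
    "/Blog/": "/blog/",
}


def redirect_middleware(app: Callable, redirects: Dict[str, str]) -> Callable:
    """Wrap a WSGI app so requests for mapped paths get a 301 to the kept page."""
    def wrapped(environ, start_response):
        target = redirects.get(environ.get("PATH_INFO", ""))
        if target is not None:
            # 301 = Moved Permanently; browsers and crawlers follow the Location header.
            start_response("301 Moved Permanently", [("Location", target), ("Content-Length", "0")])
            return [b""]
        return app(environ, start_response)
    return wrapped


def site(environ, start_response):
    """Stand-in for the real site: every other path returns a normal page."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"page"]


app = redirect_middleware(site, REDIRECTS)
# To try it locally: from wsgiref.simple_server import make_server
# make_server("", 8000, app).serve_forever()
```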

3. Robots Meta Noindex and Follow Tag

You can keep a page out of search engine indexes by adding a meta robots tag to the head section of the page’s HTML. With the value “content=noindex, follow,” you tell search engines not to include the page in their index but still to follow the links on it.

The meta robots noindex tag makes it easy to deal with duplicate content spread across paginated pages. When there is too much content to fit on a single page, the site splits it into pages with different URLs. If you add the “noindex, follow” meta tag to those pages, search engine spiders can still crawl them and follow their links, but the pages won’t be indexed or shown in search results.

The following is a sample of duplicate content caused by pagination:

As seen in the image above, the Blue Frog blog features many content-filled pages indexed by search engines. By including the robots meta tag, these pages can still be crawled but will not be indexed.
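As a sketch of how a template could choose the tag, the Python function below returns “noindex, follow” for every page of a listing after the first. The `/page/2/` URL pattern is an assumption (the style WordPress uses by default for paginated archives), and the function name is hypothetical.

```python
import re

# Assumption: paginated archives use URLs such as /blog/page/2/.
PAGE_PATTERN = re.compile(r"/page/(\d+)/?$")


def robots_meta_for(path: str) -> str:
    """Return the meta robots tag for a listing page, based on its page number."""
    match = PAGE_PATTERN.search(path)
    page_number = int(match.group(1)) if match else 1
    # Page 1 stays indexable; later pages stay out of the index,
    # but crawlers still follow their links to the individual posts.
    content = "noindex, follow" if page_number > 1 else "index, follow"
    return f'<meta name="robots" content="{content}" />'
```

Because “index, follow” is what search engines assume when no tag is present, you could also omit the tag on the first page.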

Tips to Prevent Duplicate Content

If you’re careful about the structure of your pages, you can reduce the likelihood that duplicate content will be produced. Listed below are a few approaches you can take to reduce content duplication:

1. Internal Linking Consistency

A well-planned internal linking strategy helps build a page’s SEO value. Your linking strategy should prioritize quality over quantity, and you must keep the structure of your URLs consistent.

If you’ve determined that www.wbcomdesigns.com/ is the most authoritative version of your homepage, then all internal links to the homepage should point to https://www.wbcomdesigns.com/ rather than https://wbcomdesigns.com/.
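To audit this, a short Python sketch can scan a page’s HTML with the standard library’s `html.parser` and flag internal links that don’t use the preferred version. `PREFERRED_ORIGIN`, `SITE_HOSTS`, and the function and class names are assumptions for illustration, matching the www-with-HTTPS choice above.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# Assumption: https://www.wbcomdesigns.com is the preferred version.
PREFERRED_ORIGIN = ("https", "www.wbcomdesigns.com")
SITE_HOSTS = {"wbcomdesigns.com", "www.wbcomdesigns.com"}


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def inconsistent_internal_links(html: str, page_url: str) -> list:
    """Return internal links that point at a non-preferred scheme or host."""
    collector = LinkCollector()
    collector.feed(html)
    flagged = []
    for href in collector.hrefs:
        # Resolve relative links against the page they appear on.
        parts = urlsplit(urljoin(page_url, href))
        if parts.hostname in SITE_HOSTS and (parts.scheme, parts.hostname) != PREFERRED_ORIGIN:
            flagged.append(href)
    return flagged
```

Links to other domains are ignored, and relative links are judged by where they resolve from the page being checked.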

2. Trailing Slashes

For example, example.com/blog and example.com/blog/ differ only in the trailing slash.

If one internal link uses a trailing slash and another doesn’t, the same page can end up with duplicate content at two URLs.
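One way to keep links consistent is to run every internal URL through a single helper before it is written into a page. This Python sketch assumes a hypothetical policy of trailing slashes on page URLs, with file URLs (a last path segment containing a dot) left alone; the function name is illustrative.

```python
from urllib.parse import urlsplit, urlunsplit


def with_trailing_slash(url: str) -> str:
    """Apply one assumed policy: page URLs end in "/", file URLs are left as they are."""
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    # A dot in the last segment (e.g. logo.png) is taken to mean a file, not a page.
    if not path.endswith("/") and "." not in last_segment:
        path += "/"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```

If your site’s canonical URLs have no trailing slash, invert the rule; what matters is applying the same one everywhere.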

3. Use a Self-Referential Canonical Tag

A page is self-canonical when its HTML includes a rel=canonical link tag pointing to the page’s own URL. The tag tells search engines that this version of the page should be considered the canonical one.

Scrapers often duplicate pages by copying the original HTML and publishing it at another URL. Because the rel=canonical tag is part of that HTML, it is often copied along with the content, so the copy still points search engines to your original page. This extra precaution only works if the scraper copies that specific section of the HTML code.


Blue Frog’s homepage, for example, includes a rel=canonical tag containing the homepage’s own URL. If a content scraper copied the page, the tag would tell search engines which URL is the original.
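To confirm your own pages declare themselves canonical, a minimal Python sketch can read the first rel=canonical link in a page’s HTML and compare it with the page’s URL. The class and function names are hypothetical, and the comparison is exact, so `/blog` and `/blog/` count as different URLs.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel can hold several space-separated values, so split before checking.
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def is_self_canonical(html: str, page_url: str) -> bool:
    """True if the page's canonical tag points exactly at its own URL."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical == page_url
```

On a scraped copy, the same check run against the copy’s URL returns `False`, because the copied tag still names your original page.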

Conclusion

Once you have a firm grasp of the causes, the remedies, and, in some cases, the value of duplicate content, you can begin removing the duplicates you don’t want and managing the ones you decide to keep.

Your ultimate goal should be a site known for its high-quality, original content, with every page getting the full value of that content.
