An open web crawler is a program or automated script that systematically browses the web to retrieve and index information from websites. The best open web crawlers are efficient, customizable, and built to handle large-scale data extraction. They support applications such as search engine indexing, data mining, and content aggregation, and they let users collect web data for research and analysis.

Open web crawlers are also usually highly configurable, which makes it possible to tailor data extraction to a specific use case. As the volume of online content keeps growing, they matter more than ever for efficient data retrieval and analysis, serving purposes that range from academic research and market analysis to content curation and business intelligence.

What is a web crawler used for?

A web crawler, often referred to as a spider or bot, is a program designed to systematically browse the internet, indexing and gathering information from websites. It plays a crucial role in search engine operations, as it collects data from various web pages to create an index used for search engine results. Web crawlers are also used for tasks like web archiving, data mining, and monitoring website changes. They enable search engines to provide relevant and up-to-date information to users, making the internet more accessible and navigable. Additionally, businesses utilize web crawlers to collect market data, monitor competitors, and gather information for various research and analytical purposes.
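
To make the "systematically browse and index" idea concrete, here is a minimal sketch of a breadth-first crawler using only the Python standard library. It is an illustration under stated assumptions, not how any particular search engine works: the `fetch` argument is a hypothetical caller-supplied function that returns a page's HTML (or `None` on failure), and the crawl is limited to the start URL's host.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl that stays on the start URL's host.

    `fetch` is a caller-supplied function (hypothetical here) that takes a
    URL and returns the page's HTML as a string, or None on failure.
    Returns a dict mapping each visited URL to the links found on it.
    """
    host = urlparse(start_url).netloc
    frontier = deque([start_url])  # URLs waiting to be fetched
    seen = {start_url}             # URLs already queued, to avoid repeats
    index = {}

    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        # Resolve relative links against the page URL and drop #fragments.
        links = [urldefrag(urljoin(url, href)).url for href in parser.links]
        index[url] = links
        for link in links:
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                frontier.append(link)
    return index
```

Passing `fetch` in as a parameter keeps the sketch independent of any HTTP library and makes it easy to try against an in-memory dictionary of pages before pointing it at a real site.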

What are open-source web crawlers?

Open-source web crawlers are software tools that automate the systematic extraction of data from websites. Their source code is publicly available, so users can modify and customize them to fit their own requirements. They are used for purposes such as data mining, content aggregation, and SEO analysis. Notable examples include Scrapy, Apache Nutch, and Heritrix.

Is it legal to crawl a website?

The legality of web crawling depends on various factors, including the website’s terms of service, the applicable laws in the jurisdiction, and the purpose of the web crawling. In general, web crawling without permission can potentially violate copyright and data protection laws, especially if the crawler accesses or collects sensitive information or causes disruption to the website’s operation.

To ensure the legality of web crawling, it is advisable to:

- Review the website’s terms of service and robots.txt file for any crawling restrictions.

- Obtain permission from the website owner or administrator before initiating any crawling activities.

- Adhere to the legal guidelines and regulations governing data scraping and privacy in your jurisdiction.

- Respect the website’s bandwidth and server load to avoid causing disruptions or server overloads.

It’s crucial to consult legal professionals or experts knowledgeable in data privacy and web scraping regulations to ensure compliance with the law when conducting web crawling activities.
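
Two of the points above, checking robots.txt and limiting server load, can be sketched with Python's standard `urllib.robotparser` module. This is a minimal illustration, not legal guidance: the crawler name, the sample rules, and the one-second fallback delay are all assumptions, and `fetch` is a hypothetical caller-supplied download function.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-crawler"  # hypothetical crawler name

# Normally the file is downloaded with set_url() and read(); the rules are
# parsed from a literal here so the sketch runs without network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_text):
    """Parse robots.txt content into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def polite_fetch(url, parser, fetch, sleep=time.sleep):
    """Fetch `url` only if robots.txt allows it, pausing before the request."""
    if not parser.can_fetch(USER_AGENT, url):
        return None  # the site asked crawlers not to visit this path
    # Use the site's Crawl-delay if it set one, else an assumed 1 second.
    delay = parser.crawl_delay(USER_AGENT) or 1
    sleep(delay)
    return fetch(url)
```

A robots.txt check only covers the site's stated crawling preferences; terms of service and applicable law still need to be reviewed separately.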

Top 10 open-source web crawlers

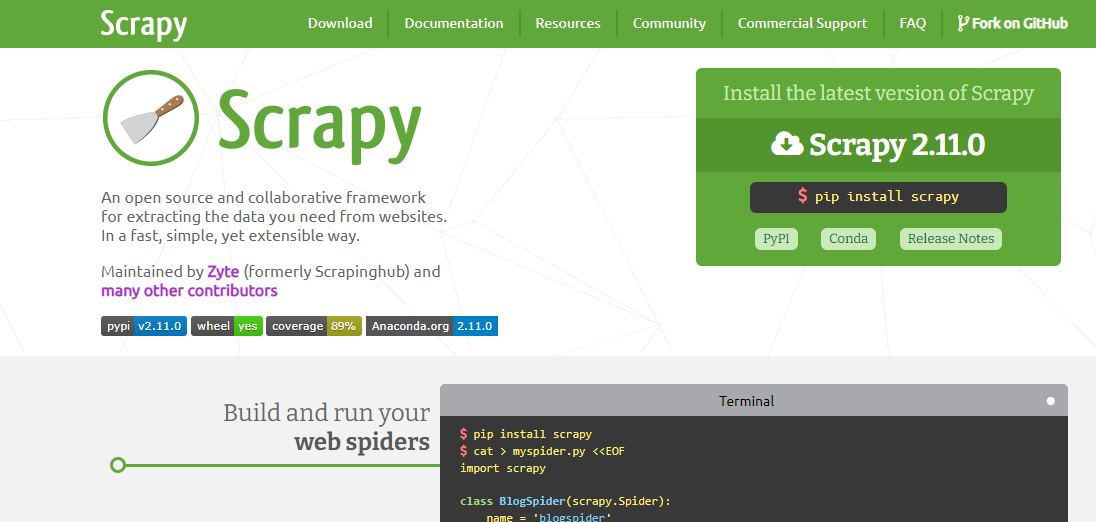

1. Scrapy

Scrapy is a widely used Python framework for web scraping and crawling. It facilitates the extraction of data from websites and offers a robust, scalable solution for various crawling needs.

Features:

- Asynchronous: Allows for efficient processing of multiple requests simultaneously.

- Extensible: Provides a framework that can be easily extended and customized for specific scraping requirements.

- Data Handling: Offers built-in support for handling data and parsing HTML/XML, simplifying the extraction process.

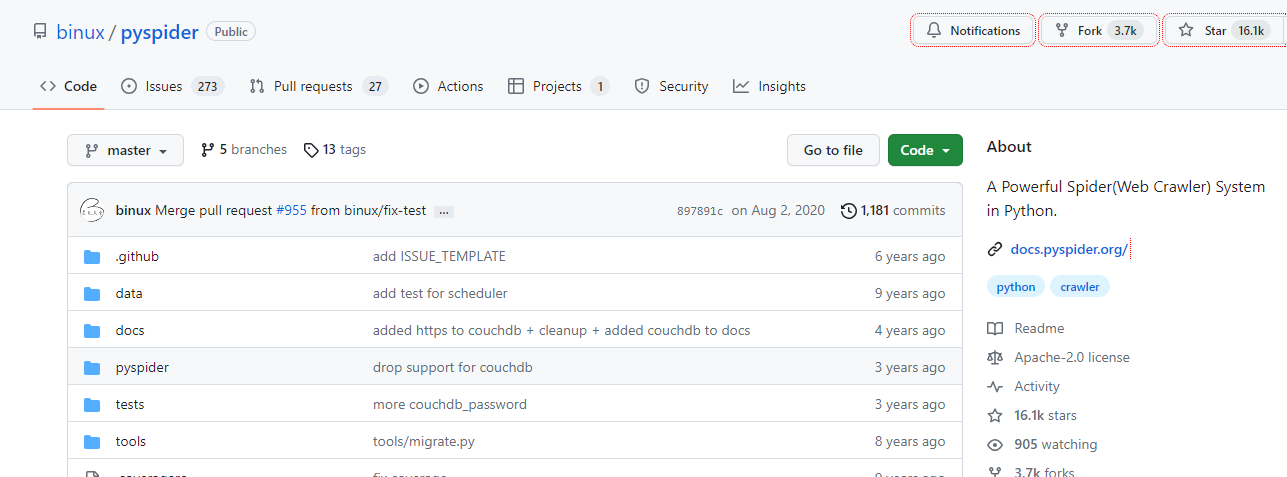

2. Pyspider

Pyspider is a powerful and easy-to-use Python-based web crawling framework. It is designed to streamline the process of web data extraction and provides a user-friendly interface for managing spider tasks.

Features:

- Distributed Crawling: Supports the distribution of crawling tasks across multiple nodes, enabling faster data extraction.

- Real-time Data Extraction: Facilitates the real-time extraction of data from websites, ensuring up-to-date information retrieval.

- Web Interface: Provides a convenient web interface for monitoring and managing spider tasks, making the process more accessible.

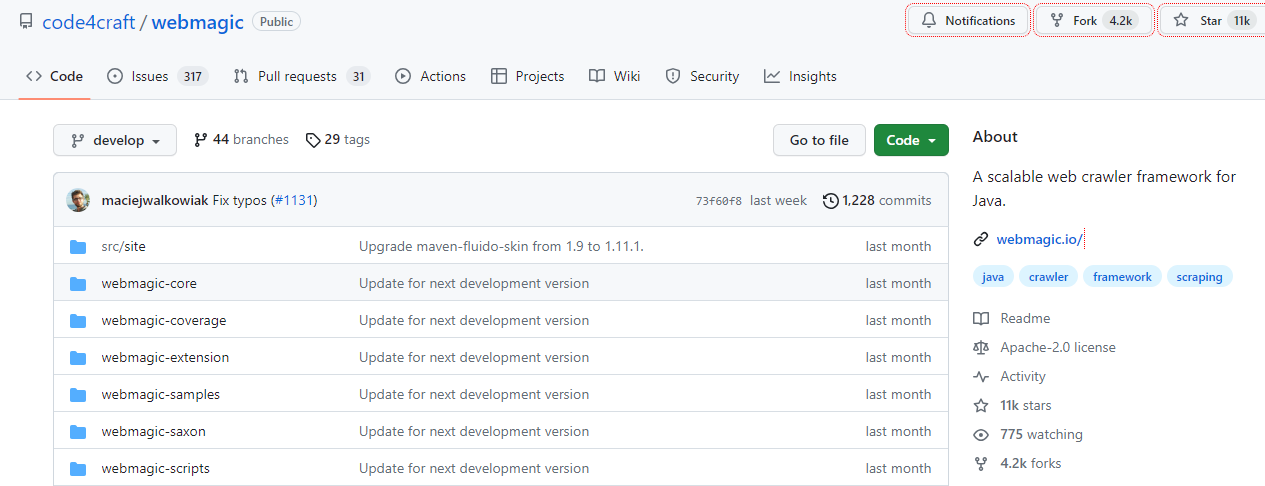

3. Webmagic

Webmagic is a Java-based web crawling framework known for its simplicity and ease of use. It provides a reliable solution for web data extraction and processing tasks.

Features:

- Multi-threading: Supports concurrent processing through multi-threading, enabling faster extraction of data from websites.

- Automatic Retries: Offers automatic retry mechanisms to handle failed requests and ensure reliable data extraction.

- Customizable Pipelines: Allows for the customization of data processing pipelines to suit specific data handling requirements.
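
Automatic retries are a general crawling technique rather than something unique to Webmagic. The following is a language-neutral idea shown as a minimal Python sketch of retry with exponential backoff; it is not Webmagic's API, and `fetch`, the attempt count, and the delays are assumptions made for illustration.

```python
import time


def fetch_with_retries(url, fetch, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call `fetch(url)` until it succeeds or `max_attempts` is reached.

    `fetch` is a caller-supplied function (hypothetical here) that returns
    the page body or raises OSError on a network failure.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except OSError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # Exponential backoff: base_delay, then 2x, then 4x, ...
            sleep(base_delay * 2 ** (attempt - 1))
```

Waiting longer after each failure gives a struggling server time to recover instead of hitting it again immediately.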

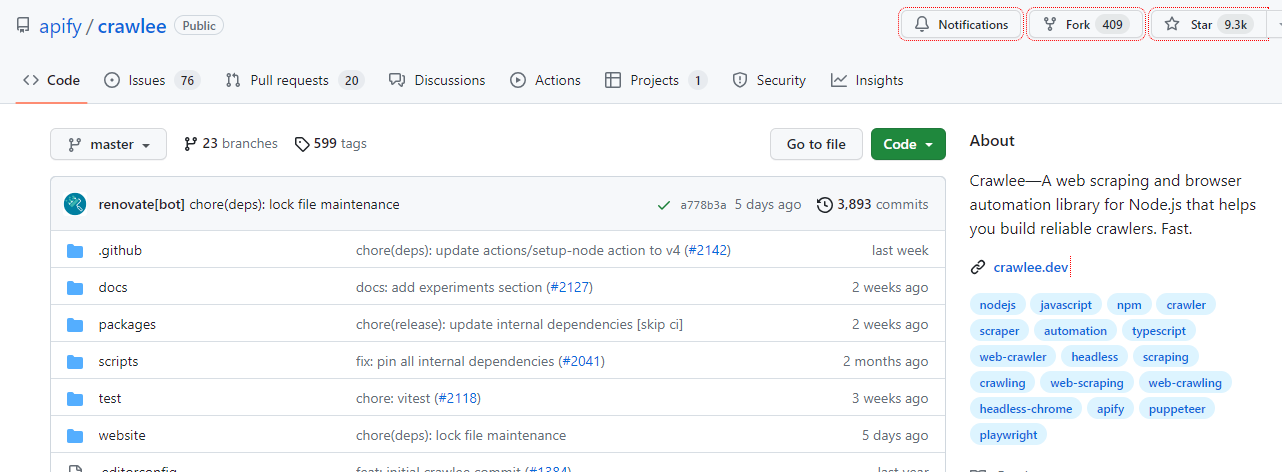

4. Crawlee

Crawlee is an open-source web crawling and scraping library developed by Apify. It was originally written for Node.js (JavaScript/TypeScript), and a Python version is also available. It is designed to make crawling and extracting data from websites straightforward.

Features:

- Parallel Processing: Supports parallel processing for efficient and speedy data extraction from multiple sources.

- User-agent Rotation: Allows for the rotation of user-agents to prevent bot detection and ensure uninterrupted crawling.

- Pause and Resume: Provides the capability to pause and resume crawling tasks, ensuring flexibility in managing the extraction process.

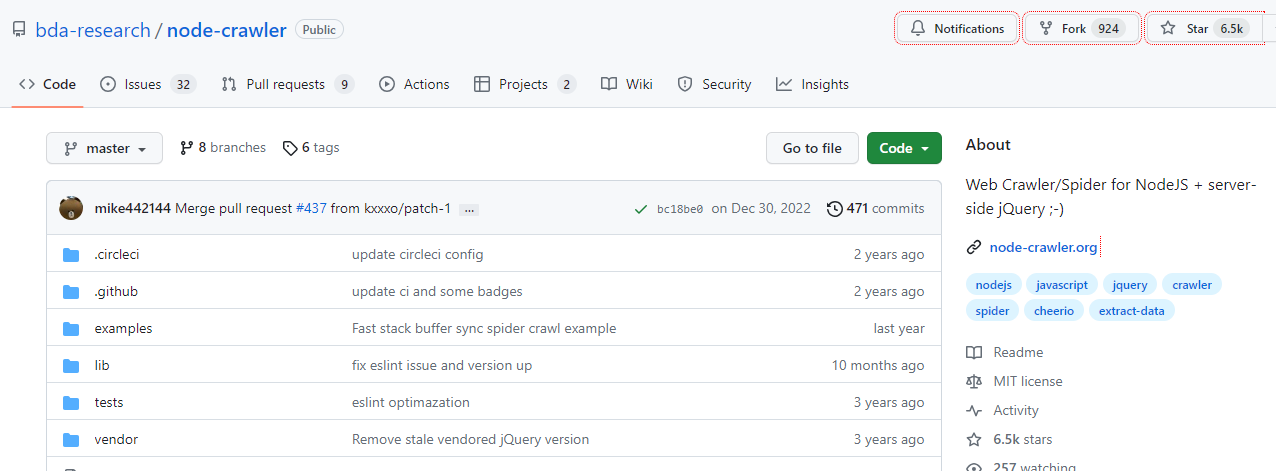

5. Node Crawler

Node Crawler is a JavaScript-based web crawling and scraping framework known for its flexibility and robust capabilities. It offers an efficient solution for handling complex websites and data extraction tasks.

Features:

- Flexible Configuration: Provides flexible configuration options to adapt to various website structures and data extraction requirements.

- Proxy Rotation: Supports proxy rotation to ensure anonymity and prevent IP blocking during the crawling process.

- Complex Website Handling: Capable of efficiently handling complex websites and navigating through intricate web structures for data extraction.

6. Beautiful Soup

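Beautiful Soup is a popular Python library for parsing HTML and XML documents in web scraping projects. It is a parser rather than a full crawler: it does not download pages itself, so it is usually paired with an HTTP client or a crawling framework.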

Features: It provides a simple and Pythonic way to navigate, search, and modify the parsed data. Beautiful Soup facilitates tasks such as scraping information from web pages, extracting specific data based on HTML tags, and manipulating the HTML structure.
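
A short sketch of what navigating and searching parsed data looks like with Beautiful Soup. The HTML snippet and the `extract` helper are made up for this example, and the page is parsed from a string because Beautiful Soup does not fetch pages on its own.

```python
from bs4 import BeautifulSoup

# Made-up sample page used only for this illustration.
HTML = """
<html>
  <head><title>Sample page</title></head>
  <body>
    <h1 class="headline">Hello</h1>
    <a href="/first">First</a>
    <a href="https://example.com/second">Second</a>
  </body>
</html>
"""


def extract(html):
    """Return the page title, headline text, and all link targets."""
    # "html.parser" is Python's built-in parser, so no extra install is needed.
    soup = BeautifulSoup(html, "html.parser")
    return {
        "title": soup.title.string,
        "headline": soup.find("h1", class_="headline").get_text(strip=True),
        "links": [a["href"] for a in soup.find_all("a", href=True)],
    }
```

In a real scraper, the HTML string would come from an HTTP client or a crawler, and `extract` would be applied to each downloaded page.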

7. Nokogiri

Nokogiri is a widely used Ruby gem for parsing and working with HTML and XML documents.

Features: It offers an intuitive and convenient way to extract and manipulate data from web pages. Nokogiri provides a robust set of tools for navigating and searching HTML/XML content, making it a popular choice for data extraction and manipulation tasks in Ruby-based projects.

8. Crawler4j

Crawler4j is an open-source Java library specifically designed for developing web crawlers and web scraping applications.

Features: It simplifies the process of building web crawlers by providing an easy-to-use interface and configuration options. Crawler4j supports politeness settings, making it suitable for respectful web scraping, and can handle various types of content, enabling users to efficiently collect and process data from websites.

9. MechanicalSoup

MechanicalSoup is a Python library that automates web interaction and web scraping tasks, making it easier to navigate and scrape data from websites.

Features: It simplifies form submissions, session handling, and other web scraping tasks using Python. MechanicalSoup provides a convenient way to interact with web pages, submit forms, and extract desired information, making it a valuable tool for various web automation and scraping tasks.

10. Apache Nutch

Apache Nutch is a highly extensible, Java-based open-source web crawling and data extraction framework.

Features: It is designed for large-scale web crawling and data extraction tasks, offering support for distributed crawling and integration with Hadoop for big data analysis. Apache Nutch enables users to efficiently collect, parse, and process large volumes of data from the web, making it suitable for complex and extensive data extraction projects.

Conclusion

Open-source web crawlers offer diverse solutions for data extraction and web scraping, catering to different programming languages and project requirements. With a variety of features such as extensibility, scalability, and support for handling complex web pages, these tools enable developers to efficiently gather and process data from the web. Each crawler comes with its own strengths, making it crucial for users to assess their specific needs and choose the most suitable option for their web crawling projects.

Frequently Asked Questions (FAQs)

What is an open-source web crawler?

An open-source web crawler is a software tool used to systematically browse the internet, collect data from various websites, and index information for analysis and retrieval purposes. It is accessible to the public and can be modified and redistributed by users.

What are the advantages of open-source web crawlers?

Open-source web crawlers provide users with the flexibility to customize and modify the tool according to their specific needs. They are often cost-effective and allow for greater transparency and community collaboration.

Which open-source web crawlers are popular?

Some popular open-source web crawlers include Scrapy, Apache Nutch, Heritrix, StormCrawler, and Beautiful Soup. These tools offer a range of functionalities and can be tailored to suit different crawling requirements.

What types of data can open-source web crawlers extract?

Open-source web crawlers can extract various types of data, including text content, images, videos, URLs, metadata, and other structured or unstructured information available on web pages.

How can businesses use open-source web crawlers?

Businesses can leverage open-source web crawlers to gather market intelligence, conduct competitor analysis, monitor online trends, and collect data for research and analytics. These tools help businesses stay informed about industry developments and consumer preferences.
